The impact of propaganda on public opinion, the social network administration’s response to complaints, and the legal aspects related to the spread of foreign propaganda on an American platform: MOST Media & News has examined every side of this issue.

August 10, 2025

Since February 2022, Russian propaganda has launched a large-scale information campaign to justify the invasion and undermine international support for Ukraine. A central narrative was the rhetoric of “denazification” — claims that the country was ruled by Nazis and that Russia was forced to purge it of extremists. This message was accompanied by hate speech and the dehumanization of Ukrainians, including ethnic slurs, to justify violence. At the same time, the Kremlin promoted the notion that Ukraine is a puppet of the West, and that NATO expansion and U.S./EU “aggression” compelled Russia to launch a “special operation.” Facebook was also used to promote conspiracy theories — for example, about secret U.S. biolabs in Ukraine or claims that mass killings (such as the Bucha massacre) were staged.

While many investigative journalists shifted their focus to examining the activity of pro-Russian groups on X (Twitter) and Telegram, Russian propagandists were developing their methods of promotion on other social platforms — most notably, on Facebook.

Our investigation revealed that a coordinated network of fake accounts and pages on Facebook artificially boosted post engagement through likes, comments, and shares. This allowed propaganda to bypass the platform’s algorithms and amplify its influence on Western audiences, including American voters.

A particularly sophisticated form of promoting pro-Russian propaganda on Facebook involved posts — and even entire pages — dedicated to showcasing Russian weapons systems and their so-called “technical superiority”, as well as visual content featuring cultural and historical landmarks with AI-generated elements added. Such posts create a “soft power” effect (Joseph Nye, Soft Power: The Means to Success in World Politics), aimed at shaping a positive perception of Russia abroad. Visually appealing scenes could be accompanied by propagandistic captions or symbols, reinforcing the core narratives of the campaign and aiding their spread among various segments of Western audiences. Some of the identified posts were deliberately adapted to fit the U.S. political context, potentially influencing American voters’ preferences — directly or indirectly — especially in the run-up to the elections.

Such posts not only undermine support for Ukraine and fuel anti-NATO sentiment, but could also have influenced the most recent U.S. presidential election by shaping public opinion on key issues of international policy.

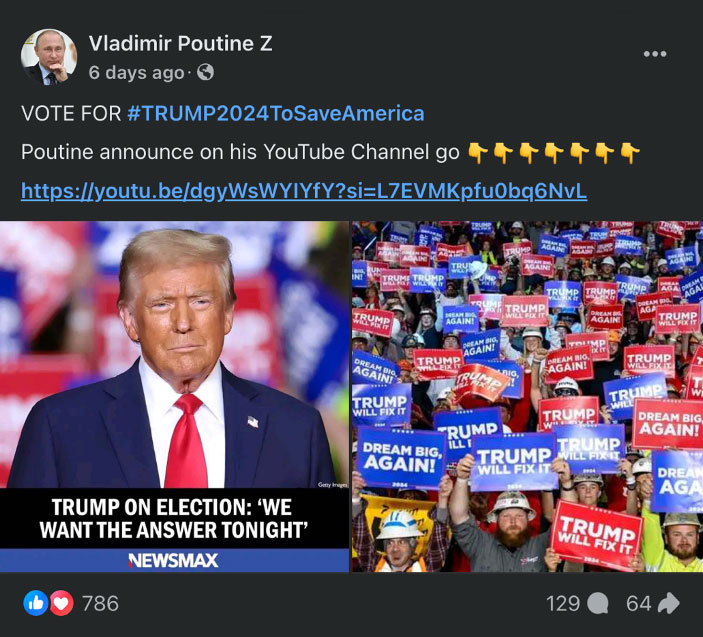

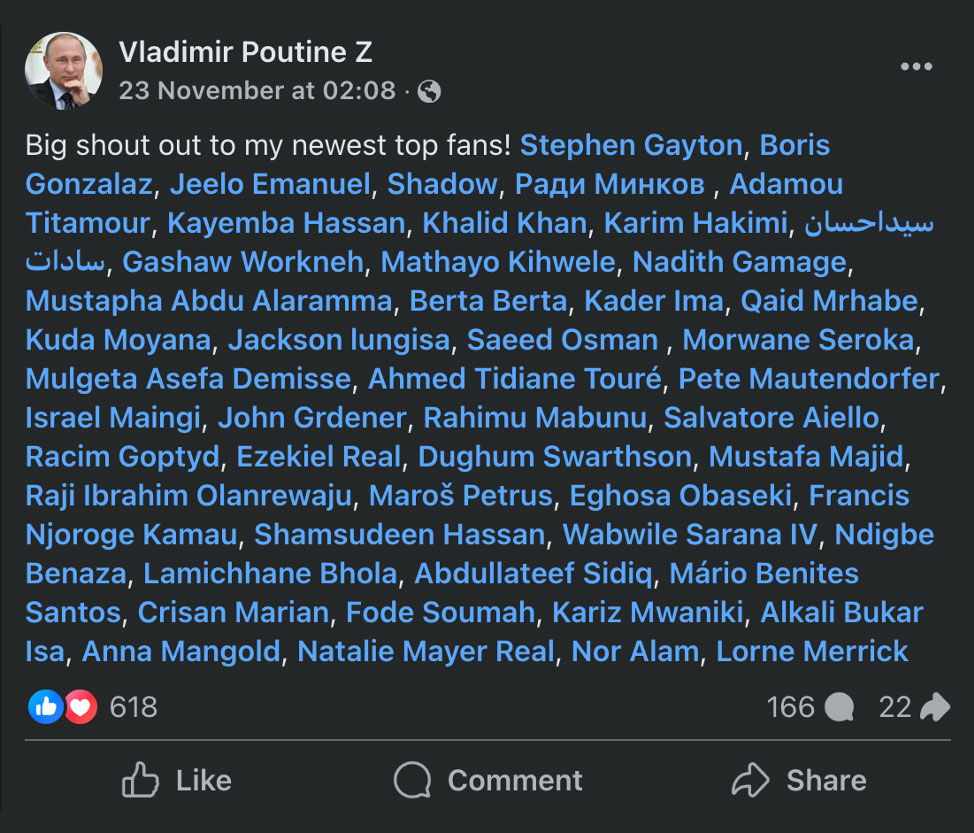

Example from the profile Vladimir Poutine Z: covert manipulation of public opinion through pro-Trump messaging. While such posts appear to be domestic political content, they become part of a broader foreign influence campaign when published through pseudonymous accounts like this one.

Calls to end military support for Ukraine in 2023–2024 became a point where Kremlin rhetoric intersected with Trump’s campaign statements. According to political communication expert and professor Sarah Oates, the 2024 U.S. elections were among the most significant for Russia, as a substantial reduction in U.S. aid to Ukraine was highly likely if Trump were to win. Russian propaganda actively fueled sentiments in favor of such an outcome. On Facebook, this manifested in the emergence of content aimed at American conservatives and Trump supporters.

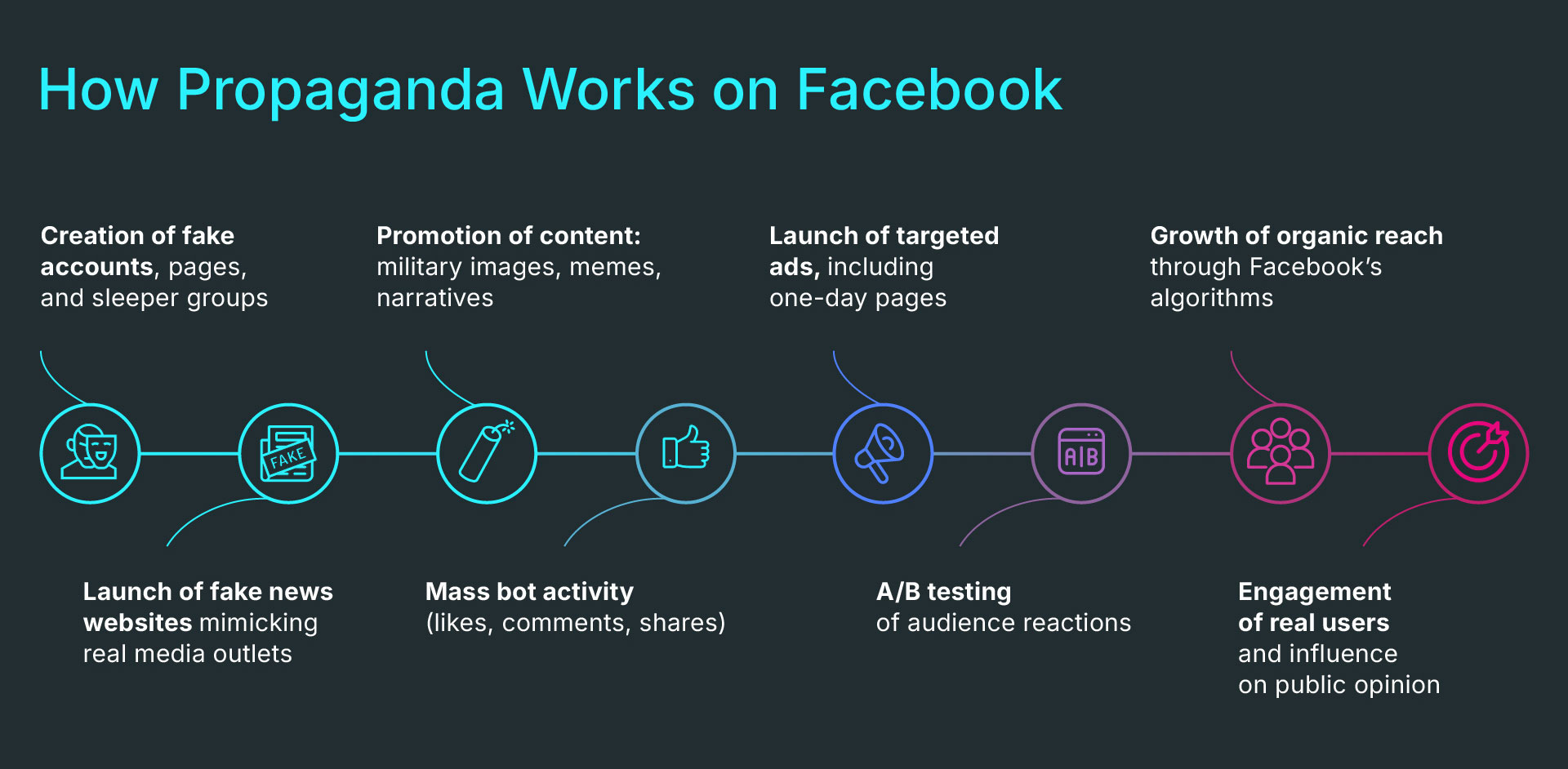

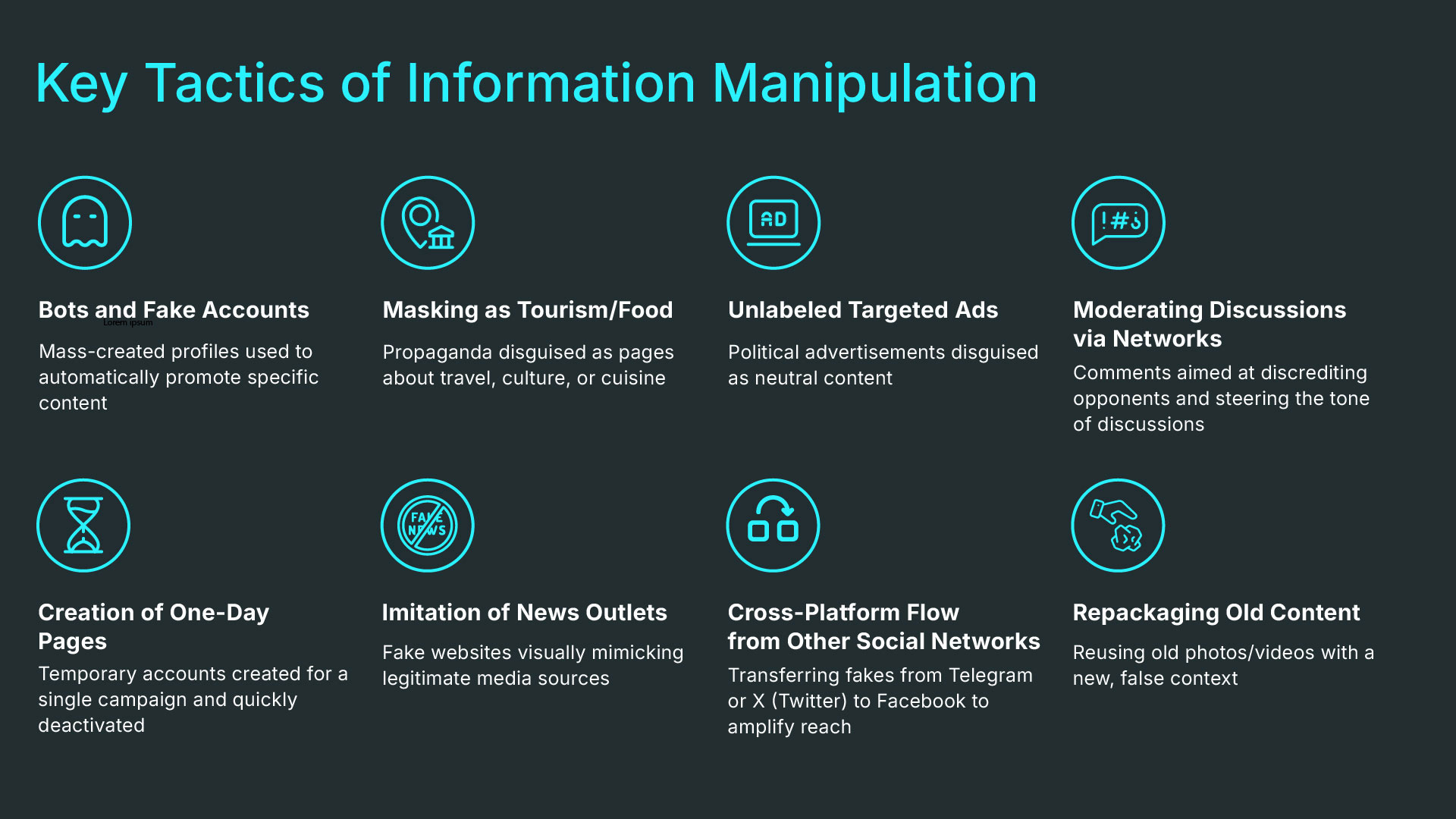

To promote their content on Facebook, pro-Russian actors employed a broad arsenal of tactics, often exploiting vulnerabilities in the platform’s algorithmic mechanisms. In Meta reports, such coordinated operations are classified as CIB (Coordinated Inauthentic Behavior). Below are the key methods identified since 2022.

As in previous years, the foundation of these operations consisted of thousands of fake profiles posing as ordinary users or page administrators with shared interests. Meta had repeatedly exposed and blocked such networks in the past. For example, in 2022 it uncovered the largest Russia-linked network since the start of the war: more than 1,600 fake accounts and pages that coordinated the spread of Kremlin war propaganda. This campaign, launched in May 2022, included over 60 fake news websites imitating well-known outlets (Spiegel, The Guardian, Bild, and others) in multiple languages. After publishing pro-Kremlin content (smearing Ukraine, discrediting refugees, claiming that sanctions were ineffective and only harmed the countries imposing them), the organizers widely shared links to these sites through a network of fake accounts on Facebook, Instagram, Twitter, and Telegram, and also promoted them through paid advertising.

Meta described this operation as a combination of technically complex elements (imitating media websites in seven languages required significant resources) and brute force (numerous fake profiles and advertising). This “hybrid” approach allowed the perpetrators to reach a wide audience relatively quickly: instead of spending time on organic follower growth, they immediately flooded the information space with a massive wave of identical messages. Such direct “frontal attacks” on the information environment are aimed more at short-term impact (“hit-and-run”) than at building long-term trust, but they can inflict substantial damage before being neutralized.

1,600

fake accounts and pages coordinating Kremlin war propaganda

Facebook’s ad targeting algorithms became tools for propagandists. Investigations by AI Forensics revealed that pro-Russian groups actively purchased political ads while circumventing oversight. Thousands of such ads were found from anonymous pages pushing Kremlin-aligned messaging in EU countries. Over 65% of this political content lacked mandatory labeling, meaning Facebook failed to mark it as political or disclose the sponsor. This points to vulnerabilities in Facebook’s ad moderation systems. Actors created throwaway pages to publish a single paid post, quickly reaching hundreds of thousands of users before deleting the page. “The page exists solely to pay for the ad, gain immediate reach, and then disappear; this is more effective than trying to grow a page over time,” explains AI Forensics researcher Paul Bouchaud.

In addition, targeted advertising was used not only to inject ideas but also as an analytics tool: Russian operators monitored which messages resonated most, tracking user reactions in real time. One of the documents created by pro-Kremlin political strategist Ilya Gambashidze as part of the disinformation campaign known as the Good Old USA Project explicitly states: “Facebook targeting allows you to track audience reactions to distributed materials and adjust subsequent launches based on which group was most strongly affected.”

Thus, Facebook’s advertising platform served as a kind of testing ground for propaganda, where adversaries used A/B testing to select the most effective narratives. In the second half of 2022, before a full-scale investigation had begun, Meta’s specialists manually identified and neutralized malicious accounts that automated systems had not yet removed.

>65%

of political ads ran on Facebook without required labeling

Facebook’s feed algorithm prioritizes content likely to generate strong emotional reactions — anger, outrage, fear — to increase user engagement. Propagandists exploited this by posting highly provocative messages and images designed to spark conflict. The more users commented or shared (even in outrage), the more the algorithm promoted the post. For pro-Russian disinformation, this meant that highly polarizing content gained disproportionate visibility. Studies have shown that platforms optimized for engagement unintentionally accelerate the viral spread of lies and conspiracy theories.

Moreover, actors used artificial engagement boosting tactics: bot groups or controlled accounts would immediately like and comment on a target post to “boost” it in algorithmic rankings. In the aforementioned “Good Old USA” plan, it was noted that a “bot network” would moderate top-level discussions in comment sections to steer the narrative. Dozens of coordinated comments would appear under each targeted post, creating the illusion of widespread support or attacking opposing views. Regular users, seeing such “resonance,” might mistakenly believe the propaganda reflects mainstream opinion.

Russian operators created and maintained Facebook communities in advance, sometimes for months, posing as local interest groups such as Trump supporter groups in specific states (“Alabama for America the Great” and similar). These groups were filled with neutral or thematically adjacent content that raised no suspicion. The plan was that at a decisive moment, these “sleeper cells” on the social network would be activated: administrators would abruptly switch to distributing campaign materials, turning groups with already established audiences into tools for influencing public opinion. Court documents in the Gambashidze case, unsealed by the U.S. Department of Justice, revealed intentions to create 18 such cells in key U.S. swing states, so that at the critical moment they could work in unison to promote targeted narratives and links.

This tactic relies on Facebook’s built-in recommendation mechanisms: people in such groups begin sharing materials that can then spread through their friends’ feeds, while the algorithms may recommend joining popular groups to friends with similar interests, thereby increasing reach.

18

“sleeper cells” were planned across key U.S. swing states

Although this report focuses on Facebook, many operations were in fact cross-platform. Fake content first appeared on other platforms — for instance, AI-generated videos on YouTube or alleged “leaks” on Telegram — before being shared on Facebook by ordinary users. This way, propagandists leveraged the entire social media ecosystem: even if one platform banned their accounts, they still achieved reach via another. In many cases, Facebook became a secondary amplifier of disinformation that originated elsewhere — especially when it was shared by real users who had been misled. For example, fake videos of “Ukrainian soldiers threatening Trump” were originally disseminated through pro-Russian channels and then picked up by U.S. conspiracy groups on Facebook — vastly increasing their reach.

In this way, the Russian propaganda network on Facebook constitutes a complex structure of hundreds of fake accounts and pages acting in coordination to spread Kremlin-aligned narratives. These accounts, many of which interact with one another artificially, create the illusion of broad support for Russia and its policies, particularly in the context of the ongoing war in Ukraine. They comment on and repost each other’s content to boost engagement scores and maximize reach.

Many of these pages have thousands of followers and regularly post images and videos showcasing Russian military equipment and symbols, including the “Z” symbol associated with the Russian armed forces. Pages like Vladimir Poutine Z illustrate how visual materials and national symbols are used to intensify pro-Russian sentiment.

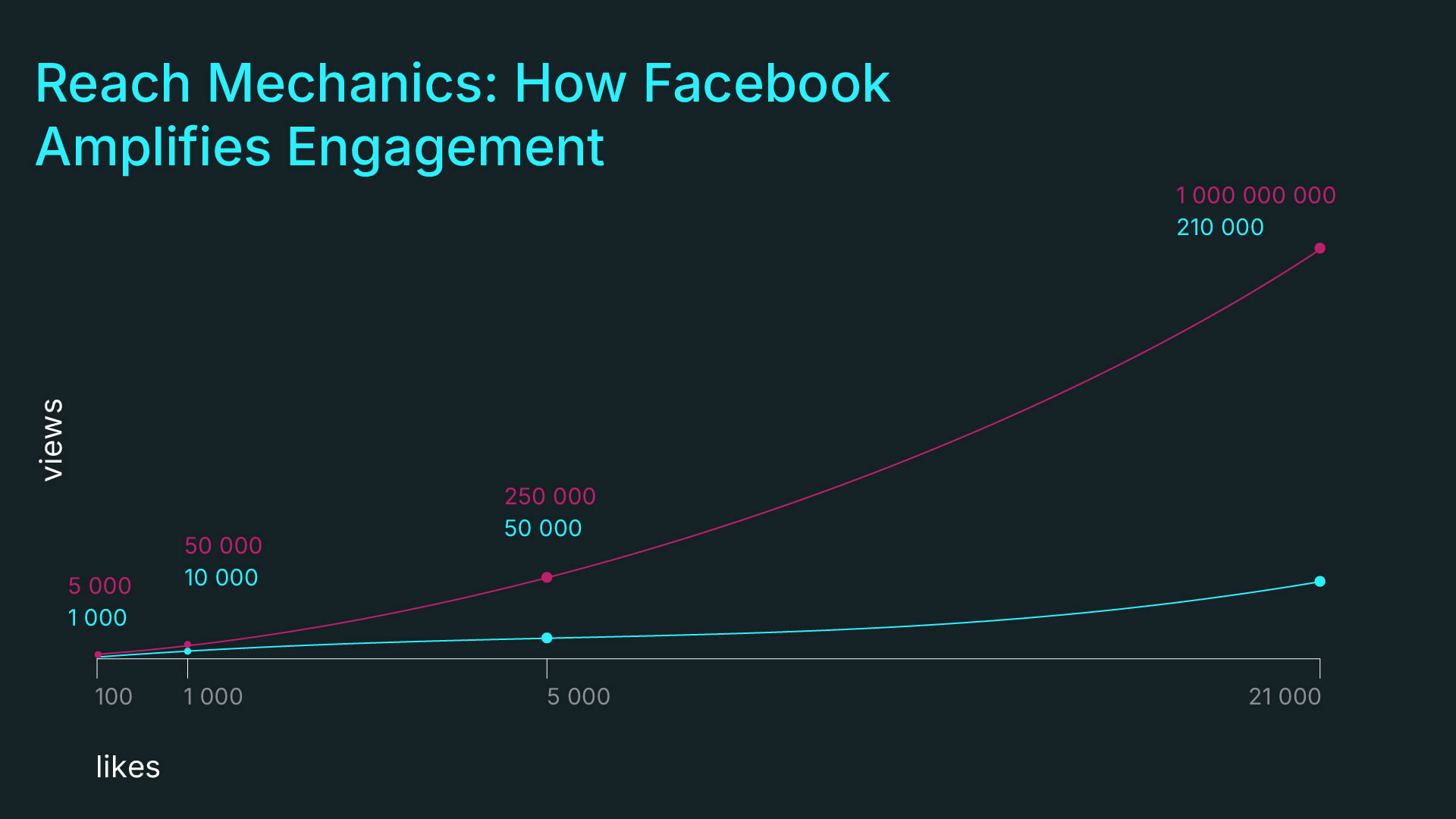

To assess the reach of pro-Russian content on Facebook, we used a method based on engagement metrics (likes, comments, shares) and existing studies, such as the model by Daniel Schneider, Director of Content Marketing at SimilarWeb (“How to Effectively Calculate Engagement Rate”). According to available data, the average like-to-view ratio on Facebook ranges from 2% to 10%. This allows us to estimate views from likes by dividing the like count by the ratio: 1 like corresponds to approximately 10–50 views (1/0.10 to 1/0.02), depending on content type and audience engagement level.

For example, a post with 5,000 likes could reach between 50,000 and 250,000 views. Comment and share rates also play a critical role in increasing visibility.

At the same time, the key factor is not the origin of likes and comments, but their impact on Facebook’s algorithms. High activity under posts — organized in part through fake accounts or by using so-called “troll farms” — causes content to be promoted organically and shown in the feeds of users who are not subscribed to those pages. This enables pro-Russian content to reach a wider audience, bypassing restrictions on advertising and disinformation. The Good Old USA plan, developed as part of Russian information operations, called for the creation and use of a “bot network” to generate and moderate top discussions in comment sections. These bots were intended to steer social media discussions in a direction favorable to Russia, creating the illusion of mass support for the desired narratives. The plan was part of the broader “Doppelganger” campaign and is mentioned in several investigations, including the U.S. Department of Justice report (2024).

One illustrative example is a post featuring an image of a BM-21 “Grad” multiple rocket launcher, which received 21,000 likes, 1,500 comments, and 581 shares. Based on the like count alone, this post may have reached between 210,000 and roughly 1.05 million views, even without accounting for views from reshares. These figures show how fake account networks can effectively push content through platform algorithms.
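The like-based estimate used in this section can be reproduced with a few lines of Python. This is a minimal sketch, not the tooling used in the investigation: the function name `estimate_views` and its parameter names are assumptions made for illustration, and only the 2% to 10% like-to-view range and the like counts come from the text above.

```python
from typing import Tuple


def estimate_views(likes: int,
                   min_ratio: float = 0.02,
                   max_ratio: float = 0.10) -> Tuple[int, int]:
    """Return a (low, high) range of estimated views for a post.

    Assumes the like-to-view ratio lies between min_ratio and max_ratio
    (2% to 10% in the article). Comments and shares are ignored here.
    """
    if likes < 0:
        raise ValueError("likes must be non-negative")
    if not 0 < min_ratio <= max_ratio <= 1:
        raise ValueError("ratios must satisfy 0 < min_ratio <= max_ratio <= 1")
    # A higher like-to-view ratio means fewer views per like,
    # so max_ratio gives the low end of the range.
    low = round(likes / max_ratio)
    high = round(likes / min_ratio)
    return low, high


# The two posts discussed in the article.
print(estimate_views(5_000))   # generic post with 5,000 likes
print(estimate_views(21_000))  # BM-21 "Grad" post with 21,000 likes
```

For 5,000 likes this yields the 50,000 to 250,000 range cited above, and for the 21,000-like post it yields 210,000 to 1,050,000, an upper bound of about 1 million. The result is only as reliable as the assumed ratio range, which varies by content type and audience.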

As a result, pro-Russian posts promoted by fake accounts reach a large number of real users, influencing their perception and affecting the platform’s information space.

These pages and accounts primarily publish content in English and target Western audiences, including users in the United States. Their high engagement rates help them reach users’ news feeds even if those users have not subscribed to the pages in question.

During our analysis, we identified dozens of such posts showcasing Russian military equipment and symbols, each with thousands of likes, comments, and shares. This allows us to estimate total reach in the hundreds of thousands, if not millions, of views. Artificially inflated engagement, driven by fake account networks, helps such content spread widely through Facebook’s algorithm — reinforcing pro-Russian narratives in Western information spaces.

Here are some examples of such pages and profiles:

50–250K

estimated views for a post with 5,000 likes

Vladimir Poutine Z (page, 120K followers)

Mandira Roy (page devoted entirely to Vladimir Putin, 38K followers)

MFA-Daily Page (23K followers). Currently filled with recipes and food photos to avoid suspicion — but it “activates” during key moments to spread Russian propaganda or campaign materials.

ATF-5 (page, 20K followers). Now appears to be comic-focused, but if you scroll back to U.S. election periods, the content is clearly pro-Russian.

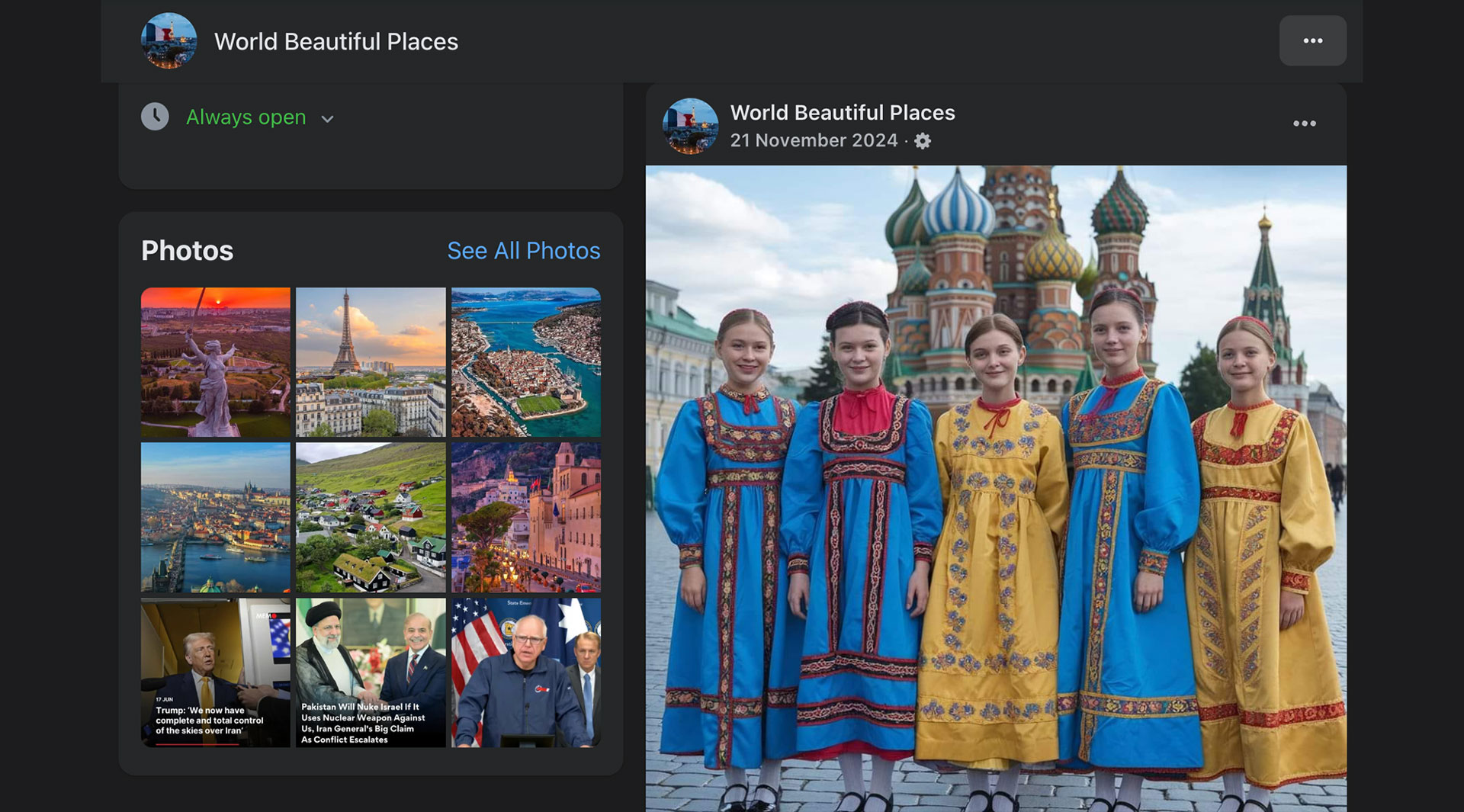

World Beautiful Places (page, 109K followers)

At first glance, this page seems to share content about global landmarks, but scrolling down reveals predominantly pro-Russian propaganda. A clear example: a map of Ukraine labeled “Russia.”

Or images of women in Soviet army uniforms.

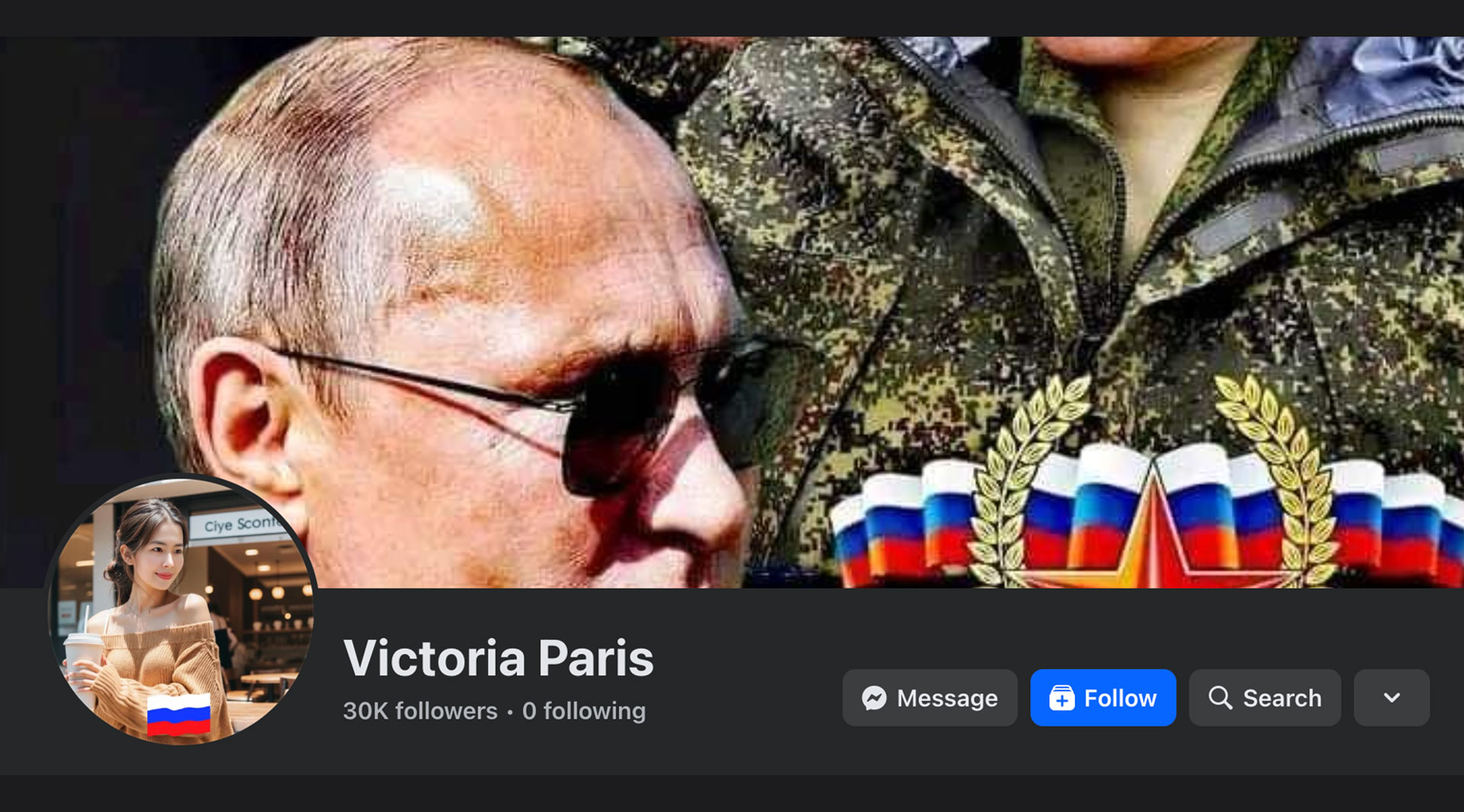

Victoria Paris (page, 24K followers)

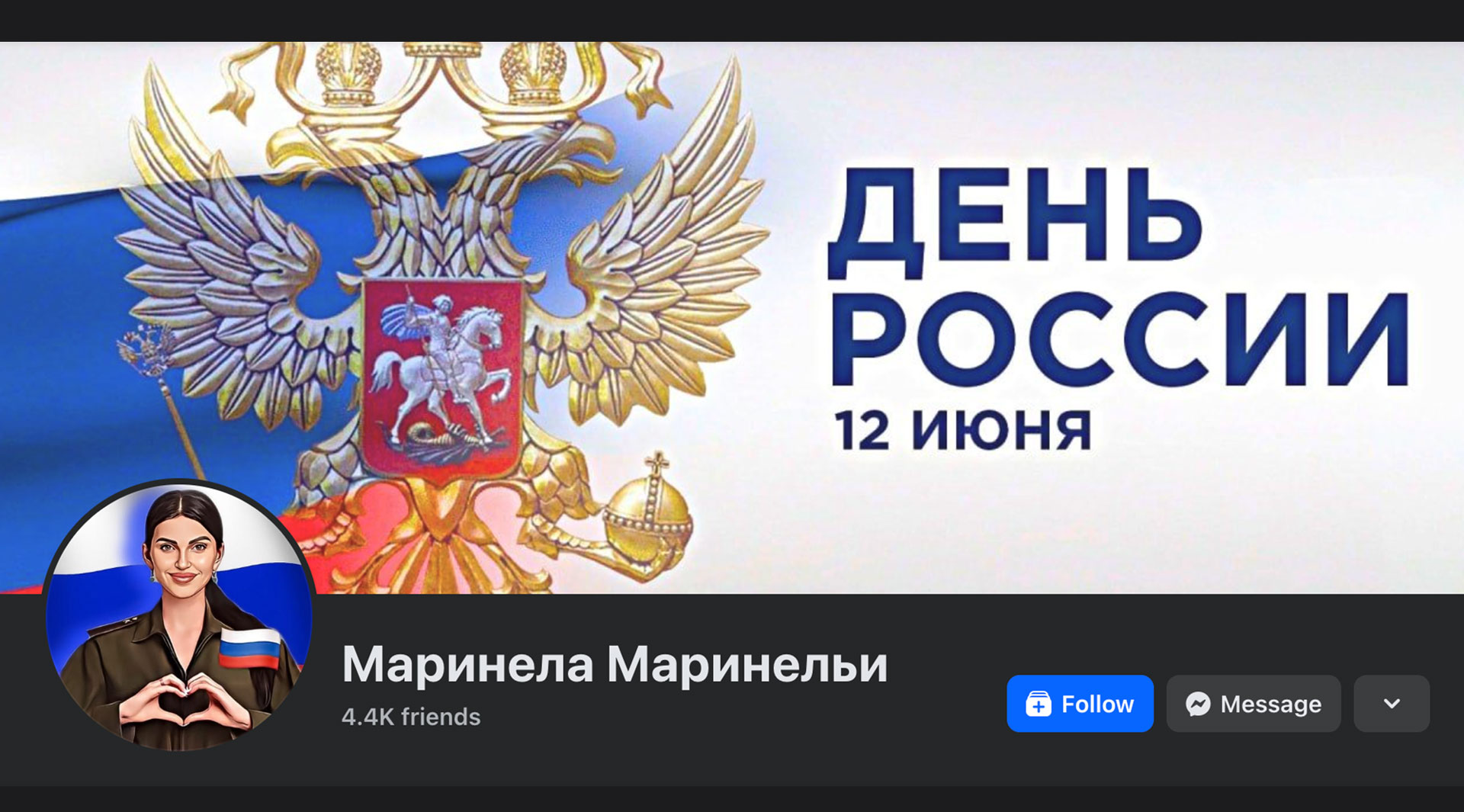

Marinela Marinelli (profile, 8K followers). This profile appears to exist solely to spread Russian propaganda. Several indicators suggest it’s fake, but Facebook rejected complaints and did not consider the profile name fraudulent.

Dozens — possibly hundreds — of similar pages and profiles appear to have been created solely to promote Russian propaganda abroad.

Fake accounts and pages are created to bypass Facebook’s algorithms and reach the largest possible audience. Mutual follows, likes, and comments among these accounts artificially inflate engagement metrics, making the content appear more frequently in users’ news feeds. As a result, posts featuring militaristic imagery and pro-Russian narratives gain significant attention — including among American voters — potentially influencing their political views.

These tactics illustrate the extensive reach and strategic intent behind Russia’s propaganda campaigns on social media, aimed at reshaping Western public opinion regarding support for Ukraine and trust in democratic institutions.

An analysis of profiles and pages promoting pro-Russian content shows that at least half of those commenting on such posts are real users. They behave like typical Facebook users: engaging in discussions, expressing opinions, and participating in comment threads. This indicates that the content does reach genuine audiences through Facebook’s algorithms, even if bots or paid users are used at the early stages of promotion.

Further analysis reveals that many of these commenters are based in countries such as the United States, the United Kingdom, Germany, and others. These users actively participate in conversations and share posts with their own followers, increasing the organic reach of the content.

Thus, even when fake activity is used to initiate promotion, the content eventually resonates with real people — making its influence even more significant.

Russia’s propaganda campaign on Facebook and other social platforms, aimed at English-speaking audiences, has indirect but serious implications for European politics — particularly in countries like Germany. The United States, as NATO’s leading partner and a primary supporter of Ukraine, has strong influence over political elites in Germany, the UK, and elsewhere. Russian narratives promoted in the U.S., which call for ending aid to Ukraine or emphasize anti-war rhetoric, may resonate in Europe as well, especially through media “crossflows” and international influence networks.

Key risks for Germany and the EU:

Spread of anti-NATO and anti-Ukrainian sentiment. Pro-Russian messages that gain traction in the U.S. can seep into political discourse in Germany and other European countries. This was especially visible ahead of the German parliamentary elections, where far-right populist parties like the Alternative for Germany (AfD) used anti-war talking points to mobilize voters.

War fatigue among European audiences. Russian narratives targeting English-speaking users may indirectly influence German society via transnational information channels. Since Germany is one of Europe’s top military donors to Ukraine, declining public support could pressure political leaders to reduce aid.

Political destabilization through social groups and migration discourse. Russia has used migration crises to fuel tensions in European countries. This was evident in past election cycles in Germany and Austria, where disinformation about refugees was used to mobilize conservative and far-right electorates.

We submitted multiple complaints to Facebook about pro-Russian content, including militaristic imagery and narratives undermining support for Ukraine. Despite numerous reports, Facebook’s response remained unsatisfactory. The platform rejected all of our complaints, stating that the posts did not violate its community standards.

One reply from Facebook support read: “We didn’t remove the photo. We reviewed it and found that it doesn’t violate our Community Standards.” The message also encouraged users to “use available tools to control what they see” instead of offering to remove the problematic content. This and other responses show that Facebook does not treat posts containing the “Z” symbol or imagery of Russian soldiers as violations, despite their propagandistic nature.

Because such complaints are dismissed, Facebook’s algorithm continues to reward high engagement with these posts — allowing them to spread further. Pro-Russian pages and fake accounts that interact with each other receive additional organic reach, putting this content in the feeds of users who haven’t followed the pages. This artificially inflated engagement score helps pro-Russian propaganda reach wide audiences.

The lack of adequate moderation for such posts may be linked to challenges in handling international content and the ambiguity of the rules. Since pro-Russian pages target an international audience, moderation algorithms do not always flag them as violating standards, especially if they avoid using explicit markers of disinformation. This gap allows pro-Russian narratives to spread freely despite numerous complaints from researchers and activists.

Facebook’s inadequate response leaves the platform vulnerable to foreign information operations that undermine support for Ukraine and promote pro-Russian sentiment among Western audiences. Without robust oversight and moderation, such content will continue to shape public perception of global affairs.

Given its extensive reach, Russia’s disinformation campaign on social media has a significant impact on public opinion in the U.S. and other Western countries. Through visual cues, symbols, and militaristic narratives — such as the “Z” symbol and imagery of Russian military equipment — Russian propaganda fosters a favorable image of the Russian armed forces and reinforces Kremlin policies. This content spreads via hundreds of fake accounts and pages that exploit Facebook’s algorithm to reach broad audiences, including users who never followed such pages.

According to a report by the U.S. Senate Intelligence Committee, Russia actively uses social media to promote militaristic propaganda, project strength, and appeal to Western audiences. Military imagery, such as tanks and missiles, creates a compelling narrative for patriotic users and helps mobilize pro-Russian sentiment — especially in the context of the war in Ukraine.

Other reports, such as the analysis by the Center for Strategic and International Studies (CSIS), highlight how Russian operations use bots and fake accounts to simulate public support. CSIS described how anti-Ukrainian narratives were spread using AI and automated accounts, creating the illusion of mass approval among U.S. users.

An in-depth study by our project on the role of social media in political propaganda also shows that social networks have become a key channel for spreading political propaganda, largely pushing traditional media into the background. Around two-thirds of the world’s population use social media, and in the United States, for example, more than half of adults regularly get their news from these platforms. Platform algorithms (Facebook, Twitter/X, TikTok, Telegram, and others) facilitate the viral spread of content: research shows that false news travels faster on social networks than truthful information. Mechanisms such as recommendation feeds and interest-based groups create “echo chambers,” where users are exposed predominantly to information that reinforces their existing views.

Under these conditions, social media have become an extremely powerful tool for shaping public opinion, a fact that state propaganda, among others, actively exploits. They have turned into the most potent means of influencing minds, facilitating the spread of both information and disinformation.

The spread of pro-Russian narratives also contributes to “war fatigue” among Western audiences. Continuous exposure to posts showcasing the Russian military or presenting a positive image of Russia can create a false sense of legitimacy and strength — weakening support for Ukraine. This may lead Western societies, especially in the U.S., to question the rationale for continued support — ultimately weakening the international position of democratic allies and reducing financial and military aid to Ukraine.

Russian disinformation campaigns also undermine trust in Western democratic institutions, portraying them as incapable of adequately responding to information threats and protecting their citizens from external influence. According to a study by the Georgetown Journal of International Affairs (GJIA), Putin’s propaganda often focuses on portraying Western governments as ineffective and support for Ukraine as unnecessary.

Russian propaganda fosters a negative perception of Western institutions, which may influence — and perhaps is already influencing — the political preferences of American voters and contribute to strengthening pro-Russian sentiment within the United States.

The question of how to regulate the spread of foreign propaganda on American platforms is complex, as it requires balancing the protection of free speech with national security. In the case of Russian propaganda disseminated through Facebook, there is a possibility that pages promoting pro-Russian narratives may violate both U.S. laws and certain regulatory requirements governing specific business practices. For example, the Federal Trade Commission (FTC) and the U.S. Securities and Exchange Commission (SEC) have rules aimed at protecting users, ensuring transparency in business practices, and preventing manipulation.

Combating Russian propaganda on Facebook requires a comprehensive approach. By using fake accounts and pages, Russian information campaigns successfully bypass the platform’s algorithms, creating the illusion of broad support for pro-Russian narratives among Western audiences.

The Need for Improved Algorithms and Content Moderation

To effectively counter foreign propaganda, platforms need transparent and advanced algorithms capable of identifying and limiting content that artificially inflates engagement via networks of fake accounts. Facebook and other social media companies must consider improving moderation mechanisms to prevent such content from reaching wide audiences. Transparent complaint procedures and timely responses will also help users feel protected from disinformation. Unfortunately, repeated efforts to get meaningful responses from Facebook’s moderation team have failed.

Instead, Facebook’s automated systems unfairly impose temporary restrictions on accounts that publish truthful content about Russian military activity in Ukraine — or even memes comparing Adolf Hitler to Vladimir Putin (which happened in my case, without any response to the appeal). It feels as if the platform enables propaganda while taking no action against the thousands of fake accounts and pages created with the sole purpose of influencing voters in the U.S. and Europe.

A comprehensive solution to this problem requires joint efforts by U.S. government agencies, social media platforms, and civil society. Lawmakers and regulators could consider additional measures to oversee platform activities and their algorithms, especially when it comes to the spread of Russian propaganda that may influence the country’s democratic processes.

In the context of information warfare and international aggression, it is important not only to respond to individual incidents but also to implement long-term strategies to protect Western publics from manipulation of public opinion. Transparent rules and effective moderation will help a social platform like Facebook remain a safe and trustworthy environment for users.

Sources and References:

CSIS Report (Center for Strategic and International Studies)

MOST Media & News Research: “The Role of Social Media in Political Propaganda”

SimilarWeb: Engagement Rate Analysis Method

POLITICO.EU: Russian Influence Operation on Facebook

About.FB.COM: Meta’s Reports on Fake Russian Networks

Georgetown Journal of International Affairs (GJIA): Russian Propaganda’s Effect on Western Views of the War in Ukraine

DOJ: Russia Aimed Propaganda at Gamers, Minorities to Influence the 2024 Election

AI Forensics: Analysis of Disposable Pages and Targeted Ad Disinformation

Global Business Outlook: Scope of Political Advertising and Labeling Gaps